Image by Ethan Harris

Twitter’s ban of former president Donald Trump has sparked a national debate about online censorship. Trump’s supporters rallied to his side, asserting that social media platforms should not censor conservatives for holding certain beliefs. From the printing press to Facebook, technology has always shaped how society understands freedom of speech, and this debate is only the most recent example. Free expression exists to protect and serve democracy, so limiting it can indeed be dangerous.

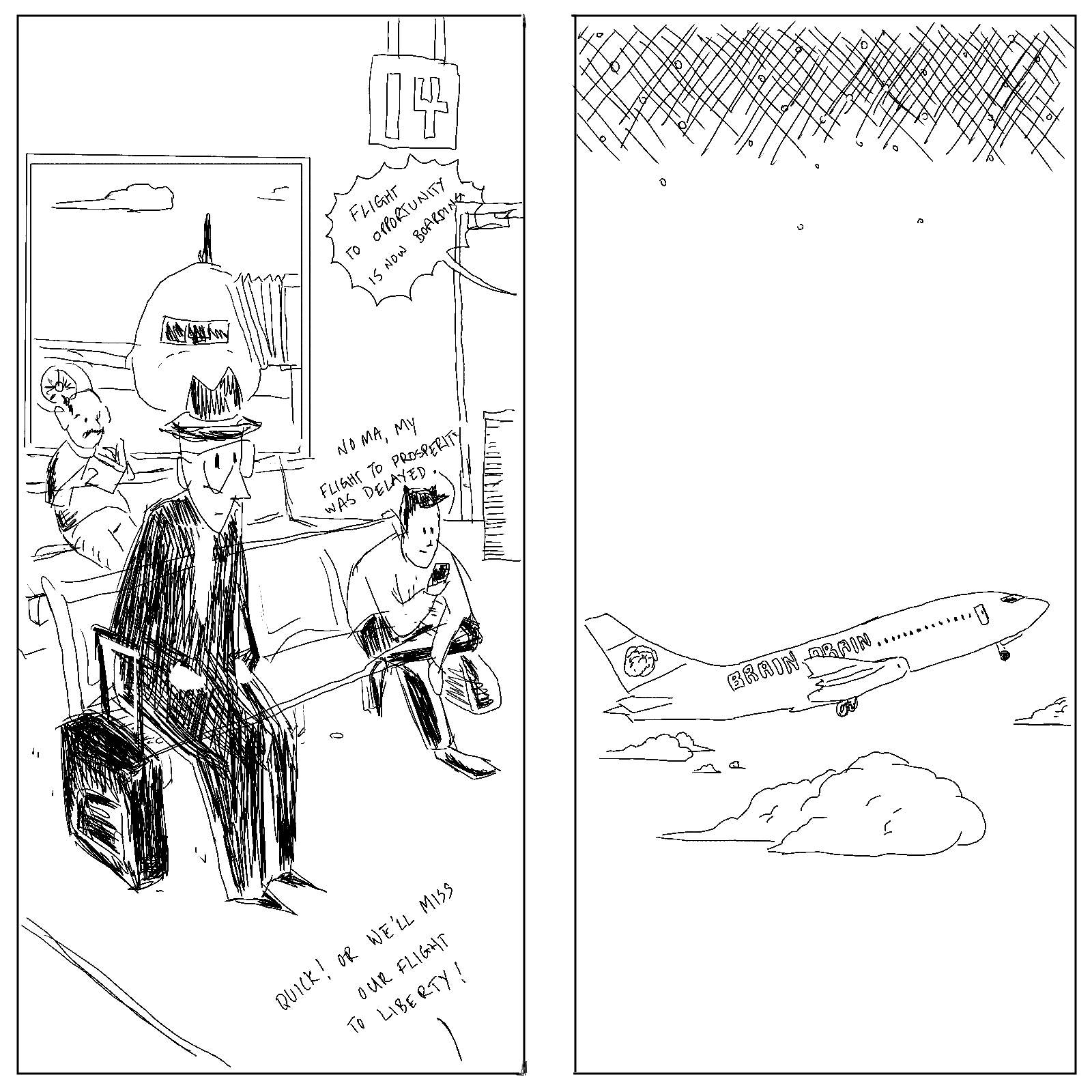

But regardless of whether Twitter censored Trump, this is not the threat to free expression that Trump and his supporters claim it to be. Rather, the most serious danger to free expression online comes from the algorithms behind social media platforms. While social media is sometimes viewed as the modern “public square” for free expression, its algorithms actually undermine democratic culture by separating people with opposing views into “echo chambers” that increase polarization, reduce truth to a matter of opinion, and pressure users to hold and act on increasingly radical beliefs.

The Business of Algorithms

Algorithms dictate how platforms like Facebook, YouTube, and Twitter function. While the specifics of each algorithm are not public, they all serve one purpose: to maximize user engagement for profit. To capture user attention, algorithms hyper-personalize each user’s experience to fit that user’s content preferences.

While giving customers what they want makes sense for any business, social media platforms are in a unique position. They are the modern marketplace of ideas, and their enormous cultural and political influence means they must balance their private interests with free speech concerns.

When platforms change their algorithms, as Facebook and Twitter did when they shifted from a “chronological” timeline to one that prioritizes user preferences, the change has tangible consequences for public discourse. Crucially, when algorithms show users content they are likely to agree with, they create problematic “echo chambers”: digital environments that reflect and “echo” users’ existing views.

The Problem

The dangers echo chambers pose to democracy are reflected in philosopher Hannah Arendt’s 1967 essay “Truth and Politics,” where she outlines the conflict between factual truth and opinion. Factual truths are the hard, historical facts that must be universally agreed upon in order to have genuine political discourse. This would include statements like “9/11 was a foreign attack on the World Trade Center in New York City on September 11, 2001.” Universal agreement on these facts is the cornerstone of public debate.

Arendt points out that evaluating others’ opinions when forming our own is “the hallmark of all strictly political thinking.” When echo chambers continually show people content they already agree with, they keep those users from seeing and understanding others’ views. In doing so, echo chambers leave the wider electorate less informed, less willing to accept opposing ideas, and more polarized.

Echo chambers limit the range of political views that users see online, warping their understanding of truth. In the New York Times’ “Rabbit Hole” podcast, former Google engineer Guillaume Chaslot explains how the YouTube algorithm recommends personalized content to users to maximize watch time and ad revenue. In the aftermath of a protest in Cairo, Chaslot observed that users who first watched pro-protester content were recommended only similar videos. Likewise, users who first watched pro-police videos received only similarly reinforcing suggestions.

The algorithm, Chaslot states, created “two different realities.” Though these users were watching coverage of the same event, they absorbed two radically different interpretations of the facts, which shaped their understanding of the protest. In other words, algorithms condition users to view truth through polarized lenses. When two people hold wildly divergent ideas of factual truth, it becomes increasingly difficult for them to consider opposing perspectives.

Further Threats to Free Expression

Not only do algorithms sort users into opposing ideological realities, but they also shift which views are considered acceptable within each echo chamber. This shift occurs partly because of grandstanding. Grandstanding, as described by Justin Tosi and Brandon Warmke in “Moral Grandstanding as a Threat to Free Expression,” is “the use of moral talk for self-promotion.” One type of grandstanding is “ramping up”: trying to one-up an opponent in a moral arms race.

Within online echo chambers, “ramping up” accelerates radicalization and stifles the expression of moderating viewpoints. Critically, social media platforms have financial incentives to promote “ramping up” content, which is often sensational and false. In turn, digital creators competing for user attention must appeal directly to the algorithm’s preference for clickbait. The algorithm creates a feedback loop in which users are recommended increasingly hyperbolic content, pushing creators to “ramp up” their content ad nauseam, all at the expense of truth.

Importantly, grandstanding can suppress the free expression of the content creators, pundits, and political “influencers” who shape public perceptions. For instance, when Georgia Secretary of State Brad Raffensperger dismissed lies about election fraud in his state, many of the loudest voices in the right-wing echo chamber called him a RINO (Republican in Name Only). Despite having voted for Trump, Raffensperger has been threatened both personally and politically online. The right-wing echo chamber deemed Raffensperger’s view, namely his acceptance of the apolitical fact that Joe Biden won the election in Georgia, unacceptable.

In presenting Raffensperger with a choice between truth-telling and keeping his job, Trump’s supporters chose “ramping up” over the truth. Echo chambers feed off partisanship, and by making truth a partisan affair, they threaten democracy. As Arendt argues, freedom of expression is meaningless if the facts themselves are up for debate.

The Future of Algorithms

One thing is clear: we must dismantle broken algorithms. Social media companies cannot posture as free and open public squares while simultaneously exploiting their users’ attention for profit. If we believe that social media is the modern marketplace of ideas, we should hold these platforms to higher standards that promote democracy and truth-telling.

We should not reward companies that exploit users engaging in democratic discourse. Unfortunately, as more people come online, especially those over fifty, the many ways in which algorithms threaten free expression are likely to worsen. We should therefore regulate bad actors like Facebook and Google, which have shown little ability to manage misinformation on their platforms effectively.

There are many ways for governments to regulate social media platforms. While no single solution will fix the entire problem, a multipronged approach from the federal government and the companies themselves could prove promising. The government has already shown its willingness to investigate the monopoly power of Google, Amazon, Apple, and Facebook, and for good reason. Congress could separate Facebook from its subsidiaries, including WhatsApp and Instagram, forcing the platform to adopt more consumer-friendly business practices to remain competitive.

Additionally, social media platforms should seek to strengthen the influence of traditional, reputable media sources like the New York Times, the Wall Street Journal, and the Associated Press. One simple private-sector solution would be a separate, exclusive verification badge that would identify and algorithmically reward trustworthy sources of factual truth. Any publication could apply for review by an independent body of historians, constitutional scholars, and journalism professors, which would determine which publications are eligible for the badge. Only straight news (non-opinion) pieces from those sources would receive the badge.

Twitter and Facebook have recently, and encouragingly, responded to the pandemic and the 2020 election by adding warning labels that link to relevant, informative sources. These platforms could and should extend this feature to more topics.

It’s high time that platforms take their social responsibility seriously. But given the way social media companies have mishandled their immense public influence, the government must step up to ensure that corporate decisions are aligned with an interest in truth and democracy.